The case for a ChatGPT usage and adoption policy for public sector administration

Author(s):

Anirban Kundu

Disclaimer: The French version of this editorial has been auto-translated and has not been approved by the author.

Chat Generative Pre-Trained Transformer (“ChatGPT”) is a chatbot based on a large language model (LLM), developed by OpenAI (a San Francisco-based AI research laboratory). It reported 100 million users in just 2 months and holds the potential to deliver value across several industries. Later versions included GPT-4, and other LLM-based tools have emerged for specific functions, e.g., ChatClimate (an LLM-based tool for climate conversations developed by University of Zurich researchers) and BloombergGPT (an LLM for finance developed by Bloomberg). Moreover, industry analysis has predicted that ChatGPT will play diverse roles across marketing, operations, R&D, engineering, and legal sectors, with the potential to add $2.6-4.4 trillion in market value. Given how quickly ChatGPT generates analysis and information, its application in the public sector is an interesting development to watch, which makes the case for an effective usage policy all the more important.

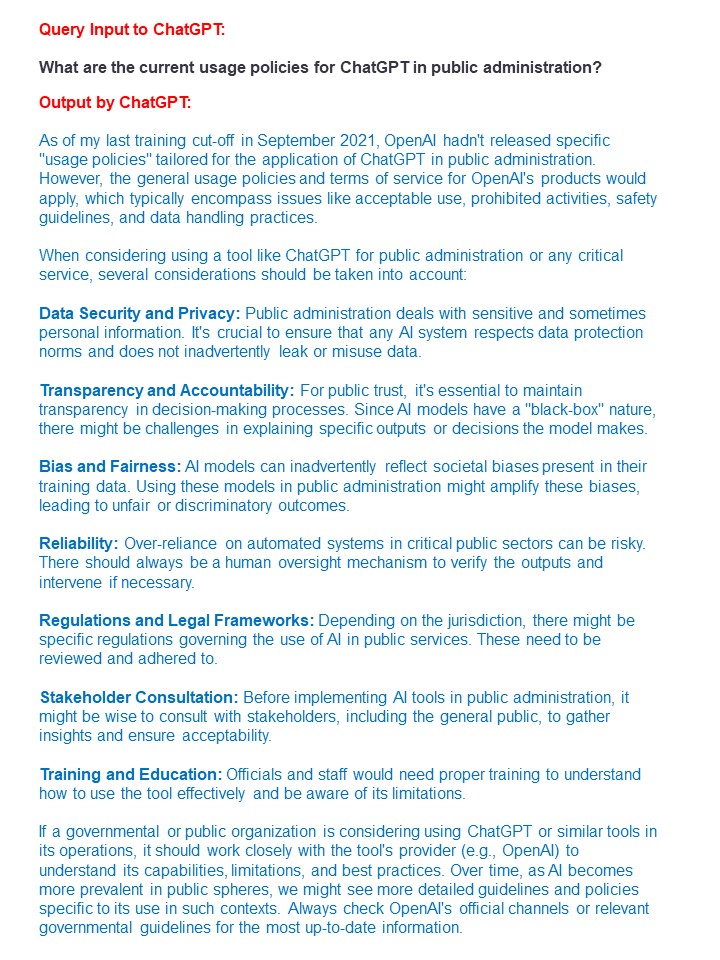

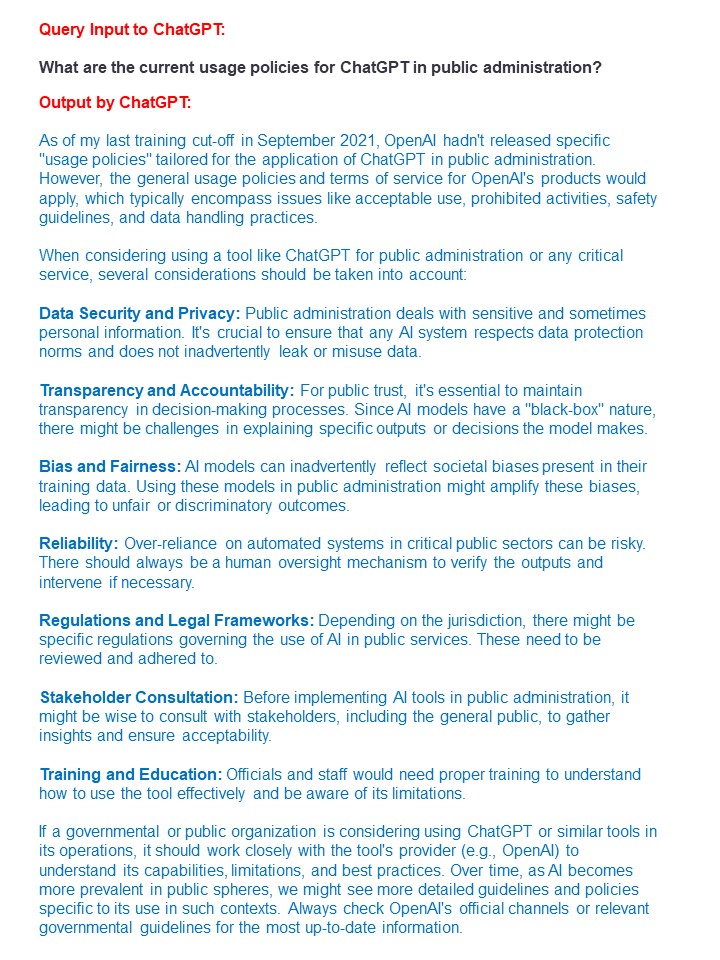

However, policy precedes application: an effective ChatGPT usage policy is essential to adoption in public administration. Public administration is governed by transparency, accountability, agility, and a society-focused approach. Accordingly, a ChatGPT policy should weigh these principles against the tool’s advantages, risks, and limitations. These include a) transparency, accountability, and bias: this is challenging because the inner workings of LLMs such as ChatGPT are not well understood; b) efficiency: the remarkable operational efficiency of ChatGPT is offset by its need for considerable computational power; c) quality of generated output: while ChatGPT builds on a comprehensive training database to generate answers, not all answers are similar in depth and quality, and reliability depends on the complexity of the input query. (Note: GPT-3 was trained on 570 GB of training data, so GPT-4 was likely trained on an even larger volume; however, OpenAI has not disclosed the specifics.) Surprisingly, when asked, ChatGPT itself raised many of these points (please refer to Schematic 1). While the tool does offer efficiency, data processing power, and linguistic support, its challenges around ethical concerns, security, misinformation, and the need for due diligence call for careful investigation before it is used in public administration.

Schematic 1. Conversation with ChatGPT (GPT-4) on readiness of the tool for public policy and administration use (for image clarity, instead of screenshots of the ChatGPT console, the generated replies have been pasted and shared in image form)

A discussion among US government leaders on ChatGPT in public administration sheds light on several adoption issues: a) the lack of policy for ChatGPT usage and the varying acceptability of its use across government agencies, b) the need to establish guardrails to prevent unnecessary dependency, c) the need to engage stakeholders and innovation experts to articulate effective AI policy, d) the need to ensure transparency in use and alignment with privacy and IP rights. Furthermore, in Canada, news reports indicate the provincial government’s emphasis on effective guardrails for the use of AI in the public sector, in support of transparent and trustworthy AI use. Overall, while government agencies acknowledge ChatGPT (and LLMs more broadly) as crucial to simplifying operations and driving innovation, they also underline that ChatGPT usage in public administration is still at a developing stage and needs informed assessment to craft effective policies.

Some ways in which informed assessment may be achieved include: a) ChatGPT governance, in which multilateral partnerships inform best practices across the value chain by i) facilitating understanding of risks and opportunities, ii) setting effective standards, iii) promoting access to cutting-edge tools, and iv) furthering AI safety research (Source: Google DeepMind); b) public-private partnerships to articulate an effective AI risk management framework and recommendations for adoption; c) effective legal and regulatory frameworks that address the transparency and ethical considerations of ChatGPT output; d) funding for more research into ChatGPT and LLM applications, and into the nature and comparative performance of different tools across scenarios relevant to public administration. In addition, published directives and resources provide helpful guidance, e.g., a) the Government of Canada’s Directive on Automated Decision-Making, whose objective (in a nutshell) is to ensure low risk when deploying AI-informed decisions, thereby also fostering data-driven decision making, with an emphasis on thorough impact assessments of AI algorithms and on making the necessary data available to the public; b) the OECD’s AI Policy Observatory (OECD.AI), which consolidates stakeholder inputs and research to provide evidence-based policy analyses on a host of topics such as AI accountability, technological and socio-economic considerations, challenges and opportunities mapping, and the regulatory landscape.

Finally, developing the literacy of government staff in the ethical use, transparency, and application of ChatGPT (and LLMs) is equally important, and can be implemented by a) training, learning, and development efforts, b) organizing stakeholder interaction sessions to capture stakeholder input on ChatGPT use and guardrails, c) continuously developing improved input queries, d) being cognizant of usage rules and restrictions, e) ensuring pilot testing, monitoring, and evaluation during execution, f) conducting internal AI audits (Adapted from UNESCO’s ChatGPT start guide in higher education).

The LLM ecosystem and its tools, such as ChatGPT, are here to stay. While such ecosystems and tools offer a wide array of operational benefits, public governance should nevertheless take a step back, critically assess, and identify how to apply ChatGPT (and LLMs in general) most effectively, while ensuring that human intellect, evidence-based decision making, and transparency are not diluted in public policy making and governance. Moreover, such an effort would be instrumental in developing usage policies and keeping pace with the integration of these tools into modern-day data collection, analysis, and decision making.